Mirror of https://github.com/mailcow/mailcow-dockerized.git (synced 2026-02-18 15:16:25 +00:00)

Compare commits: 2022-03a...feat/mTLS- (1378 commits)

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

75eb1c42d5 | ||

|

|

a794c1ba6c | ||

|

|

b001097c54 | ||

|

|

9e0d82e117 | ||

|

|

6d6152a341 | ||

|

|

9521a50dfb | ||

|

|

24c4ea6f9e | ||

|

|

95ee29dd6d | ||

|

|

ca99280e5a | ||

|

|

73fdf31144 | ||

|

|

a65f55d499 | ||

|

|

a070a18f81 | ||

|

|

423211f317 | ||

|

|

39a3e58de6 | ||

|

|

c792f6c172 | ||

|

|

3a65da8a87 | ||

|

|

c92f3fea17 | ||

|

|

4d9c10e4f7 | ||

|

|

4f79d013d0 | ||

|

|

43ba5dfd09 | ||

|

|

59ca84d6ff | ||

|

|

7664eb6fb9 | ||

|

|

be9db39a64 | ||

|

|

2463405dfd | ||

|

|

ddc0070d3a | ||

|

|

fbc8fb7ecb | ||

|

|

ff8f4c31c5 | ||

|

|

b556c2c9dd | ||

|

|

785c36bdf4 | ||

|

|

d91c4de392 | ||

|

|

31783b5086 | ||

|

|

31a33af141 | ||

|

|

2725423838 | ||

|

|

b7324e5c25 | ||

|

|

da29a7a736 | ||

|

|

a0e0dc92eb | ||

|

|

016c028ec7 | ||

|

|

c744ffd2c8 | ||

|

|

adc7d89b57 | ||

|

|

2befafa8b1 | ||

|

|

ce76b3d75f | ||

|

|

dd1a5d7775 | ||

|

|

e437e2cc5e | ||

|

|

f093e3a054 | ||

|

|

5725ddf197 | ||

|

|

4293d184bd | ||

|

|

51ee8ce1a2 | ||

|

|

6fe17c5d34 | ||

|

|

7abf61478a | ||

|

|

4bb02f4bb0 | ||

|

|

dce3239809 | ||

|

|

36c9e91efa | ||

|

|

1258ddcdc6 | ||

|

|

8c8eae965d | ||

|

|

1bb9f70b96 | ||

|

|

002eef51e1 | ||

|

|

5923382831 | ||

|

|

d4add71b33 | ||

|

|

105016b1aa | ||

|

|

ae9584ff8b | ||

|

|

c8e18b0fdb | ||

|

|

84c0f1e38b | ||

|

|

00d826edf6 | ||

|

|

d2e656107f | ||

|

|

821972767c | ||

|

|

35869d2f67 | ||

|

|

8539d55c75 | ||

|

|

61559f3a66 | ||

|

|

412d8490d1 | ||

|

|

a331813790 | ||

|

|

9be79cb08e | ||

|

|

73256b49b7 | ||

|

|

974827cccc | ||

|

|

bb461bc0ad | ||

|

|

b331baa123 | ||

|

|

cd6f09fb18 | ||

|

|

c7a7f2cd46 | ||

|

|

a4244897c2 | ||

|

|

fb27b54ae3 | ||

|

|

b6bf98ed48 | ||

|

|

61960be9c4 | ||

|

|

590a4e73d4 | ||

|

|

c7573752ce | ||

|

|

93d7610ae7 | ||

|

|

edd58e8f98 | ||

|

|

560abc7a94 | ||

|

|

f3ed3060b0 | ||

|

|

04a423ec6a | ||

|

|

f2b78e3232 | ||

|

|

0b7e5c9d48 | ||

|

|

410ff40782 | ||

|

|

7218095041 | ||

|

|

4f350d17e5 | ||

|

|

b57ec1323d | ||

|

|

528077394e | ||

|

|

7b965a60ed | ||

|

|

c5dcae471b | ||

|

|

0468af5d79 | ||

|

|

768304a32e | ||

|

|

77e6ef218c | ||

|

|

464b6f2e93 | ||

|

|

57e67ea8f7 | ||

|

|

909f07939e | ||

|

|

a310493485 | ||

|

|

087481ac12 | ||

|

|

c941e802d4 | ||

|

|

39589bd441 | ||

|

|

2e57325dde | ||

|

|

2072301d89 | ||

|

|

b236fd3ac6 | ||

|

|

b968695e31 | ||

|

|

694f1d1623 | ||

|

|

93e4d58606 | ||

|

|

cc77caad67 | ||

|

|

f74573f5d0 | ||

|

|

deb6f0babc | ||

|

|

cb978136bd | ||

|

|

1159450cc4 | ||

|

|

a0613e4b10 | ||

|

|

68989f0a45 | ||

|

|

7da5e3697e | ||

|

|

6e7a0eb662 | ||

|

|

b25ac855ca | ||

|

|

3e02dcbb95 | ||

|

|

53be119e39 | ||

|

|

25bdc4c9ed | ||

|

|

9d4055fc4d | ||

|

|

d2edf359ac | ||

|

|

aa1d92dfbb | ||

|

|

b89d71e6e4 | ||

|

|

ed493f9c3a | ||

|

|

76f8a5b7de | ||

|

|

cb3bc207b9 | ||

|

|

b5db5dd0b4 | ||

|

|

90a7cff2c9 | ||

|

|

cc3adbe78c | ||

|

|

bd6a7210b7 | ||

|

|

905a202873 | ||

|

|

accedf0280 | ||

|

|

99d9a2eacd | ||

|

|

ac4f131fa8 | ||

|

|

7f6f7e0e9f | ||

|

|

43bb26f28c | ||

|

|

b29dc37991 | ||

|

|

cf9f02adbb | ||

|

|

b5a1a18b04 | ||

|

|

b4eeb0ffae | ||

|

|

48549ead7f | ||

|

|

01b0ad0fd9 | ||

|

|

2b21501450 | ||

|

|

b491f6af9b | ||

|

|

942ef7c254 | ||

|

|

1ee3bb42f3 | ||

|

|

25007b1963 | ||

|

|

f442378377 | ||

|

|

333b7ebc0c | ||

|

|

5896766fc3 | ||

|

|

89540aec28 | ||

|

|

b960143045 | ||

|

|

6ab45cf668 | ||

|

|

fd206a7ef6 | ||

|

|

1c7347d38d | ||

|

|

7f58c422f2 | ||

|

|

0a0e2b5e93 | ||

|

|

de00c424f4 | ||

|

|

a249e2028d | ||

|

|

68036eeccf | ||

|

|

cb0b0235f0 | ||

|

|

6ff6f7a28d | ||

|

|

0b628fb22d | ||

|

|

b4bb11320f | ||

|

|

c61938db23 | ||

|

|

acf9d5480c | ||

|

|

a1cb7fd778 | ||

|

|

c24543fea0 | ||

|

|

100e8ab00d | ||

|

|

38497b04ac | ||

|

|

7bd27b920a | ||

|

|

efab11720d | ||

|

|

121f0120f0 | ||

|

|

515b85bb2f | ||

|

|

f27e41d19c | ||

|

|

603d451fc9 | ||

|

|

89adaabb64 | ||

|

|

987ca68ca6 | ||

|

|

71defbf2f9 | ||

|

|

5c35b42844 | ||

|

|

904b37c4be | ||

|

|

4e252f8243 | ||

|

|

dc3e52a900 | ||

|

|

06ad5f6652 | ||

|

|

c3b5474cbf | ||

|

|

69e3b830ed | ||

|

|

96a5891ce7 | ||

|

|

66b9245b28 | ||

|

|

f38ec68695 | ||

|

|

996772a27d | ||

|

|

7f4e9c1ad4 | ||

|

|

218ba69501 | ||

|

|

c2e5dfd933 | ||

|

|

3e40bbc603 | ||

|

|

3498d4b9c5 | ||

|

|

f4b838cad8 | ||

|

|

86fa8634ee | ||

|

|

8882006700 | ||

|

|

40fdf99a55 | ||

|

|

0257736c64 | ||

|

|

2024cda560 | ||

|

|

03aaf4ad76 | ||

|

|

550b88861f | ||

|

|

02ae5fa007 | ||

|

|

d81f105ed7 | ||

|

|

d3ed225675 | ||

|

|

efcca61f5a | ||

|

|

4dad0002cd | ||

|

|

9ffc83f0f6 | ||

|

|

981c7d5974 | ||

|

|

5da089ccd7 | ||

|

|

91e00f7d97 | ||

|

|

3a675fb541 | ||

|

|

9a5d8d2d22 | ||

|

|

de812221ef | ||

|

|

340980bdd0 | ||

|

|

f68a28fa2b | ||

|

|

7b7798e8c4 | ||

|

|

b3ac94115e | ||

|

|

b1a172cad9 | ||

|

|

f2e21c68d0 | ||

|

|

8b784c0eb1 | ||

|

|

bc59f32b96 | ||

|

|

a4fa8a4fae | ||

|

|

f730192c98 | ||

|

|

f994501296 | ||

|

|

9c3e73606c | ||

|

|

5619e16b70 | ||

|

|

d2e3867893 | ||

|

|

979f5475c3 | ||

|

|

5a10f2dd7c | ||

|

|

a80b5b7dd0 | ||

|

|

392967d664 | ||

|

|

d4dd1e37ce | ||

|

|

a8dfa95126 | ||

|

|

3b3c2b7141 | ||

|

|

f55c3c0887 | ||

|

|

f423ad77f3 | ||

|

|

8ba1e1ba9e | ||

|

|

55576084fc | ||

|

|

03311b06c9 | ||

|

|

b5c3d01834 | ||

|

|

f398ecbe39 | ||

|

|

8f1ae0f099 | ||

|

|

c8bee57732 | ||

|

|

85641794c3 | ||

|

|

849decaa59 | ||

|

|

6e88550f92 | ||

|

|

7c52483887 | ||

|

|

0aa520c030 | ||

|

|

548999f163 | ||

|

|

63df547306 | ||

|

|

547d2ca308 | ||

|

|

46b995f9e3 | ||

|

|

4f109c1a94 | ||

|

|

1fdf704cb4 | ||

|

|

5ec9c4c750 | ||

|

|

28cec99699 | ||

|

|

3e194c7906 | ||

|

|

afed94cc0e | ||

|

|

6f48c5ace0 | ||

|

|

9a7e1c2b5a | ||

|

|

2ef7539d55 | ||

|

|

4e52542e33 | ||

|

|

a1895ad924 | ||

|

|

d5a2c96887 | ||

|

|

3f30fe3113 | ||

|

|

d89f24a1a3 | ||

|

|

413354ff29 | ||

|

|

a28ba5bebb | ||

|

|

b93375b671 | ||

|

|

f39005b72d | ||

|

|

b568a33581 | ||

|

|

b05ef8edac | ||

|

|

015f9b663f | ||

|

|

b6167257c9 | ||

|

|

687fe044b2 | ||

|

|

cfa47eb873 | ||

|

|

7079000ee0 | ||

|

|

f60c4f39ee | ||

|

|

473713219f | ||

|

|

03ed81dc3f | ||

|

|

53543ccf26 | ||

|

|

3b183933e3 | ||

|

|

6c6fde8e2e | ||

|

|

61e23b6b81 | ||

|

|

6c649debc9 | ||

|

|

87b0683f77 | ||

|

|

59c1e7a18a | ||

|

|

4f9dad5dd3 | ||

|

|

adc6a0054c | ||

|

|

5425cca47e | ||

|

|

8a70cdb48b | ||

|

|

bb4bc11383 | ||

|

|

a366494c34 | ||

|

|

99de302ec9 | ||

|

|

907912046f | ||

|

|

2c0d379dc5 | ||

|

|

5b8efeb2ba | ||

|

|

f1c93fa337 | ||

|

|

a94a29a6ac | ||

|

|

7e3d736ee1 | ||

|

|

437534556e | ||

|

|

ce4b9c98dc | ||

|

|

c134078d60 | ||

|

|

a8bc6aff2e | ||

|

|

0b627017e0 | ||

|

|

eb3be80286 | ||

|

|

1fda71e4fa | ||

|

|

a02bd4beff | ||

|

|

d7f3ee16aa | ||

|

|

87e3c91c26 | ||

|

|

33a38e6fde | ||

|

|

3d8f45db43 | ||

|

|

40df25dcf0 | ||

|

|

5de151a966 | ||

|

|

115d0681a7 | ||

|

|

1c403a6d60 | ||

|

|

e67ba60863 | ||

|

|

0c0ec7be58 | ||

|

|

a72b3689b0 | ||

|

|

c4c76e0945 | ||

|

|

1a793e0b7e | ||

|

|

d0562ddbd9 | ||

|

|

3851a48ea0 | ||

|

|

40dcf86846 | ||

|

|

257e104d2b | ||

|

|

3f2a9b6973 | ||

|

|

ed365c35e7 | ||

|

|

24ff70759a | ||

|

|

c55c38f77b | ||

|

|

934bc15fae | ||

|

|

c2c994bfbb | ||

|

|

b1c2ffba6e | ||

|

|

b4a56052c5 | ||

|

|

69d15df221 | ||

|

|

e5752755d1 | ||

|

|

d98cfe0fc7 | ||

|

|

1a1955c1c2 | ||

|

|

0303dbc1d2 | ||

|

|

acee742822 | ||

|

|

8d792fbd62 | ||

|

|

d132a51a4d | ||

|

|

2111115a73 | ||

|

|

160c9caee3 | ||

|

|

33de788453 | ||

|

|

f86f5657d9 | ||

|

|

e02a92a0d0 | ||

|

|

5ae9605e77 | ||

|

|

88fbec1e53 | ||

|

|

d098e7b9e6 | ||

|

|

a8930e8060 | ||

|

|

e26501261e | ||

|

|

89bc11ce0f | ||

|

|

4b096962a9 | ||

|

|

c64fdf9aa3 | ||

|

|

9caaaa6498 | ||

|

|

105a7a4c74 | ||

|

|

09782e5b47 | ||

|

|

8d75b570c8 | ||

|

|

21121f9827 | ||

|

|

8e87e76dcf | ||

|

|

2629f3d865 | ||

|

|

8e5cd90707 | ||

|

|

9ffa810054 | ||

|

|

db9562e843 | ||

|

|

3540075b61 | ||

|

|

d0ba061f7a | ||

|

|

871ae5d7d2 | ||

|

|

633ebe5e8d | ||

|

|

1b7cc830ca | ||

|

|

d48193fd0e | ||

|

|

bb69f39976 | ||

|

|

f059db54d0 | ||

|

|

e4e8abb1b9 | ||

|

|

1a207f4d88 | ||

|

|

25d6e0bbd0 | ||

|

|

8e5323023a | ||

|

|

6d9805109a | ||

|

|

1822d56efb | ||

|

|

1e3766e2f1 | ||

|

|

718dcb69be | ||

|

|

372b1c7bbc | ||

|

|

9ba5c13702 | ||

|

|

30e241babe | ||

|

|

956b170674 | ||

|

|

2c52753adb | ||

|

|

095d59c01b | ||

|

|

1a2f145b28 | ||

|

|

930473a980 | ||

|

|

1db8990271 | ||

|

|

025fd03310 | ||

|

|

e468c59dfc | ||

|

|

340ef866d2 | ||

|

|

533bd36572 | ||

|

|

5bf29e6ac1 | ||

|

|

d6c3c58f42 | ||

|

|

b050cb9864 | ||

|

|

e176724775 | ||

|

|

8f9ed9e0df | ||

|

|

003eecf131 | ||

|

|

180b9fc8d2 | ||

|

|

5d3491c801 | ||

|

|

c45684b986 | ||

|

|

5c886d2f4e | ||

|

|

9f39af46aa | ||

|

|

7cda9f063f | ||

|

|

5e7583c5e6 | ||

|

|

a1fb962215 | ||

|

|

57d849a51b | ||

|

|

3000da6b88 | ||

|

|

db75cbbcb0 | ||

|

|

22acbb6b57 | ||

|

|

31cb0f7db1 | ||

|

|

6d17b9f504 | ||

|

|

0f337971ff | ||

|

|

6cf2775e7e | ||

|

|

dabf9104ed | ||

|

|

952ddb18fd | ||

|

|

34d990a800 | ||

|

|

020cb21b35 | ||

|

|

525364ba65 | ||

|

|

731fabef58 | ||

|

|

c10be77a1b | ||

|

|

a8bc4e3f37 | ||

|

|

815572f200 | ||

|

|

23fc54f2cf | ||

|

|

11407973b1 | ||

|

|

b9867e3fe0 | ||

|

|

3814c3294f | ||

|

|

9c44b5e546 | ||

|

|

cd635ec813 | ||

|

|

03831149f8 | ||

|

|

d8fd023cdb | ||

|

|

521120a448 | ||

|

|

ec8d298c36 | ||

|

|

db2759b7d1 | ||

|

|

3c3b9575a2 | ||

|

|

03580cbf39 | ||

|

|

2b009c71c1 | ||

|

|

b903cf3888 | ||

|

|

987cfd5dae | ||

|

|

1537fb39c0 | ||

|

|

65cbc478b8 | ||

|

|

e2e8fbe313 | ||

|

|

cf239dd6b2 | ||

|

|

a0723f60d2 | ||

|

|

da8e496430 | ||

|

|

722134e474 | ||

|

|

cb1a11e551 | ||

|

|

8984509f58 | ||

|

|

0f0d43b253 | ||

|

|

0f6956572e | ||

|

|

29892dc694 | ||

|

|

14265f3de8 | ||

|

|

0863bffdd2 | ||

|

|

3b748a30cc | ||

|

|

5619175108 | ||

|

|

6e9c024b3c | ||

|

|

8cd4ae1e34 | ||

|

|

689856b186 | ||

|

|

7b645303d6 | ||

|

|

408381bddb | ||

|

|

380cdab6fc | ||

|

|

03b7a8d639 | ||

|

|

bf6a61fa2d | ||

|

|

1de47072f8 | ||

|

|

c0c46b7cf5 | ||

|

|

42a91af7ac | ||

|

|

6e1ee638ff | ||

|

|

61c8afa088 | ||

|

|

c873a14127 | ||

|

|

06cce79806 | ||

|

|

0927c5df57 | ||

|

|

e691d2c782 | ||

|

|

67510adb9e | ||

|

|

490d553dfc | ||

|

|

70aab7568e | ||

|

|

f82aba3e26 | ||

|

|

f80940efdc | ||

|

|

6f875398c0 | ||

|

|

7a582afbdc | ||

|

|

38cd376228 | ||

|

|

74bcec45f1 | ||

|

|

9700b3251f | ||

|

|

88b8d50cd5 | ||

|

|

55b0191050 | ||

|

|

33c97fb318 | ||

|

|

23d33ad5a8 | ||

|

|

bd6c98047a | ||

|

|

73d6a29ae1 | ||

|

|

173e39c859 | ||

|

|

c0745c5cde | ||

|

|

1a6f93327e | ||

|

|

3c68a53170 | ||

|

|

e38c27ed67 | ||

|

|

8eaf8bbbde | ||

|

|

e015c7dbca | ||

|

|

58452abcdf | ||

|

|

2cbf0da137 | ||

|

|

f295b8cd91 | ||

|

|

97a492b891 | ||

|

|

aabcd10539 | ||

|

|

ee607dc3cc | ||

|

|

1265302a8e | ||

|

|

b5acf56e20 | ||

|

|

9752313d24 | ||

|

|

fe4a418af4 | ||

|

|

e5f03e8526 | ||

|

|

fb60c4a150 | ||

|

|

6b82284a41 | ||

|

|

192f67cd41 | ||

|

|

fd203abd47 | ||

|

|

6b65f0fc74 | ||

|

|

856b3b62f2 | ||

|

|

0372a2150d | ||

|

|

f3322c0577 | ||

|

|

c2bcc4e086 | ||

|

|

6e79c48640 | ||

|

|

d7dfa95e1b | ||

|

|

cf1cc24e33 | ||

|

|

6824a5650f | ||

|

|

73570cc8b5 | ||

|

|

959dcb9980 | ||

|

|

8f28666916 | ||

|

|

3eaa5a626c | ||

|

|

8c79056a94 | ||

|

|

ed076dc23e | ||

|

|

be2286c11c | ||

|

|

0e24c3d300 | ||

|

|

e1d8df6580 | ||

|

|

04a08a7d69 | ||

|

|

3c0c8aa01f | ||

|

|

026b278357 | ||

|

|

4121509ceb | ||

|

|

00ac61f0a4 | ||

|

|

4bb0dbb2f7 | ||

|

|

13b6df74af | ||

|

|

5c025bf865 | ||

|

|

20fc9eaf84 | ||

|

|

22a0479fab | ||

|

|

3510d5617d | ||

|

|

236d627fbd | ||

|

|

99739eada0 | ||

|

|

7bfef57894 | ||

|

|

d9dfe15253 | ||

|

|

3fe8aaa719 | ||

|

|

78a8fac6af | ||

|

|

6986e7758f | ||

|

|

b4a9df76b8 | ||

|

|

d9d958356a | ||

|

|

96f954a4e2 | ||

|

|

44585e1c15 | ||

|

|

c737ff4180 | ||

|

|

025279009d | ||

|

|

a9dc13d567 | ||

|

|

c3ed01c9b5 | ||

|

|

bd0b4a521e | ||

|

|

800a0ace71 | ||

|

|

db97869472 | ||

|

|

f681fcf154 | ||

|

|

db1b5956fc | ||

|

|

bdb07061ed | ||

|

|

428b917579 | ||

|

|

469f959e96 | ||

|

|

b68e189d97 | ||

|

|

028ef22878 | ||

|

|

80dacc015a | ||

|

|

0194c39bd5 | ||

|

|

f53ca24bb0 | ||

|

|

ae46a877d3 | ||

|

|

400939faf6 | ||

|

|

fd0205aafd | ||

|

|

e367a8ce24 | ||

|

|

096e2a41e9 | ||

|

|

e010f08143 | ||

|

|

3d2483ca37 | ||

|

|

535dd23509 | ||

|

|

4336a99c6a | ||

|

|

4cd5f93cdf | ||

|

|

67955779b0 | ||

|

|

26c34b484a | ||

|

|

4021613059 | ||

|

|

e891bf8411 | ||

|

|

f7798d1aac | ||

|

|

d11f00261b | ||

|

|

22cd12f37b | ||

|

|

db2fb12837 | ||

|

|

e808e595eb | ||

|

|

ce6742c676 | ||

|

|

cf3dc584d0 | ||

|

|

62f3603588 | ||

|

|

9fd4aa93e9 | ||

|

|

5bc3d93545 | ||

|

|

c28a6b89f0 | ||

|

|

1233613bea | ||

|

|

0206e0886c | ||

|

|

f6d135fbad | ||

|

|

f7da314dcf | ||

|

|

e6ce5e88f7 | ||

|

|

e5e6418be8 | ||

|

|

6507b53bbb | ||

|

|

2eafd89412 | ||

|

|

0f59d4952b | ||

|

|

7225bd2f55 | ||

|

|

deb2b80352 | ||

|

|

ad9dee92be | ||

|

|

f36bc16ca7 | ||

|

|

bda5f0ed4a | ||

|

|

cbe1c97a82 | ||

|

|

81fcbdd104 | ||

|

|

1a9294b58f | ||

|

|

310c01aac2 | ||

|

|

229303c1f8 | ||

|

|

fc075bc6b7 | ||

|

|

d04f0257c2 | ||

|

|

d11d356803 | ||

|

|

c54750ef8b | ||

|

|

510ef5196b | ||

|

|

04e46f9f5b | ||

|

|

6c0a5028c0 | ||

|

|

791bbeeb39 | ||

|

|

a5b8f1b7f7 | ||

|

|

af267ff706 | ||

|

|

46cc022590 | ||

|

|

f77c65411d | ||

|

|

1052e13af8 | ||

|

|

11e1502b12 | ||

|

|

02afc45a15 | ||

|

|

3e1cfe0d08 | ||

|

|

a3c5f785e9 | ||

|

|

d20df7d73e | ||

|

|

a8c61daeaf | ||

|

|

1a4f11209a | ||

|

|

04403aaf70 | ||

|

|

7f0dd7d0d7 | ||

|

|

cd29ad883e | ||

|

|

e1cd719a17 | ||

|

|

15bb331a7d | ||

|

|

6f3179bb8d | ||

|

|

29e5b87207 | ||

|

|

4403bc2d18 | ||

|

|

63e92e0897 | ||

|

|

aa4d8b1f47 | ||

|

|

9054ca18be | ||

|

|

38291d123f | ||

|

|

ca64ff2c0b | ||

|

|

dc85f49961 | ||

|

|

5dca4dac81 | ||

|

|

df8775d4c9 | ||

|

|

2bc663dcd5 | ||

|

|

1071bb8230 | ||

|

|

e437810eca | ||

|

|

e8fd34d31f | ||

|

|

6aebb8352e | ||

|

|

d684e0efc0 | ||

|

|

64ac6a8891 | ||

|

|

72e8180c6b | ||

|

|

d62c275004 | ||

|

|

aa7f562761 | ||

|

|

a1f033e4c1 | ||

|

|

58ddc31db6 | ||

|

|

5bf62481d5 | ||

|

|

6ff3f3f044 | ||

|

|

640f535e99 | ||

|

|

05d1a974eb | ||

|

|

99e38d81b1 | ||

|

|

ed7b384e24 | ||

|

|

5439ea1010 | ||

|

|

b719982504 | ||

|

|

8281d3fa55 | ||

|

|

9ba65a572e | ||

|

|

afddcf7f3b | ||

|

|

294569f5c9 | ||

|

|

ef6452cf55 | ||

|

|

9af40eba10 | ||

|

|

1b3a13ca19 | ||

|

|

71cc607de6 | ||

|

|

2ebd8345df | ||

|

|

f5baeb31c1 | ||

|

|

5abda44bc6 | ||

|

|

520d070081 | ||

|

|

86beba6f5a | ||

|

|

f0d9948aee | ||

|

|

8e3d2f7010 | ||

|

|

fc1c5a505d | ||

|

|

18cb06fbc7 | ||

|

|

1af785a94f | ||

|

|

7626becb38 | ||

|

|

5d5e959729 | ||

|

|

49bbdd064e | ||

|

|

9279ee2e76 | ||

|

|

a76e6b32f7 | ||

|

|

4c6f8c4f60 | ||

|

|

826d32413b | ||

|

|

b6799d9fcb | ||

|

|

8782304e8d | ||

|

|

9c55d46bc6 | ||

|

|

099db33e44 | ||

|

|

5c57df4669 | ||

|

|

152431a7d7 | ||

|

|

36fa5dc633 | ||

|

|

814f4aed15 | ||

|

|

e990856629 | ||

|

|

c97afbfa0b | ||

|

|

93b3e0302a | ||

|

|

27c87de4ed | ||

|

|

028ad4ceb9 | ||

|

|

e501642b8e | ||

|

|

7877215d59 | ||

|

|

e4347792b8 | ||

|

|

50fde60899 | ||

|

|

38f5e293b0 | ||

|

|

b6b399a590 | ||

|

|

b83841d253 | ||

|

|

3e69304f0f | ||

|

|

fe8131f743 | ||

|

|

9ef14a20d1 | ||

|

|

5897b97065 | ||

|

|

7966f010a2 | ||

|

|

b22f74cb59 | ||

|

|

c928948b15 | ||

|

|

606eaad8f7 | ||

|

|

c44281f62d | ||

|

|

1e98784eee | ||

|

|

dd9296ffc2 | ||

|

|

fc0e6b6efb | ||

|

|

68f5fbf65c | ||

|

|

9727e4084f | ||

|

|

5c2f48e94c | ||

|

|

cb098df743 | ||

|

|

b3c54ed07a | ||

|

|

c601eca25d | ||

|

|

48a13255f3 | ||

|

|

08f93c7d58 | ||

|

|

e5c9752681 | ||

|

|

afa1ed1eff | ||

|

|

072cbe62de | ||

|

|

9fe8bfadf3 | ||

|

|

75e4953070 | ||

|

|

de30650dc7 | ||

|

|

690c34bc1d | ||

|

|

4d2e32ee40 | ||

|

|

02b2988beb | ||

|

|

3f1a5af88b | ||

|

|

850fd85d4d | ||

|

|

24acd42589 | ||

|

|

eaa0dea63b | ||

|

|

dd50bbca9b | ||

|

|

f3f5471ef7 | ||

|

|

516c8ea66c | ||

|

|

48310034e5 | ||

|

|

be35a88f8c | ||

|

|

e67b512499 | ||

|

|

0cf59159cd | ||

|

|

e7a929a947 | ||

|

|

dabf4d4383 | ||

|

|

13bdd4ad0b | ||

|

|

3281b97ea9 | ||

|

|

8070db96e9 | ||

|

|

82c80a9682 | ||

|

|

136cc2e3ff | ||

|

|

eefce62f01 | ||

|

|

240b2c63f6 | ||

|

|

355da03fba | ||

|

|

55d57c552d | ||

|

|

a56e5eb2fe | ||

|

|

e7817fab78 | ||

|

|

714b8417f4 | ||

|

|

ffb68c8848 | ||

|

|

7a5be0ccbf | ||

|

|

c2927af554 | ||

|

|

24cea0cf22 | ||

|

|

f4351c119f | ||

|

|

f40b6b5b65 | ||

|

|

bff96eb1ae | ||

|

|

de9564c4c9 | ||

|

|

614aa1e49e | ||

|

|

b368c299d9 | ||

|

|

01a61d4e62 | ||

|

|

174fcd7167 | ||

|

|

dca85f2ffb | ||

|

|

8ad5acb020 | ||

|

|

287118c3a7 | ||

|

|

78621a1f50 | ||

|

|

116859e0ba | ||

|

|

c4827e908c | ||

|

|

41d56a867a | ||

|

|

9f4dd1d172 | ||

|

|

948d23f56d | ||

|

|

50e9a3ec8a | ||

|

|

0dbd6be010 | ||

|

|

2b4189b1a4 | ||

|

|

4bf81975dc | ||

|

|

ea040f4412 | ||

|

|

c246648949 | ||

|

|

125aaa5b7d | ||

|

|

aa7888c37d | ||

|

|

f1e1232849 | ||

|

|

bb7c7bcff6 | ||

|

|

e5cf35aff8 | ||

|

|

65585e286d | ||

|

|

99bcfb8c4b | ||

|

|

d98fd74968 | ||

|

|

7875185e1f | ||

|

|

a8d50955ee | ||

|

|

bfd5329363 | ||

|

|

ea1eb48596 | ||

|

|

f1bb23ba2a | ||

|

|

5160eff294 | ||

|

|

118984dfff | ||

|

|

87214fef70 | ||

|

|

f1f9626b5b | ||

|

|

3a13c93022 | ||

|

|

83bd66db98 | ||

|

|

13175b4e6c | ||

|

|

ecefbf2166 | ||

|

|

a763dda068 | ||

|

|

698b2bf988 | ||

|

|

a71cc759f6 | ||

|

|

802d304579 | ||

|

|

faf8da1365 | ||

|

|

ce546e8a90 | ||

|

|

f4731eecdb | ||

|

|

6704377402 | ||

|

|

827cb00837 | ||

|

|

299a342a62 | ||

|

|

8614d63ace | ||

|

|

77f04d10c7 | ||

|

|

6d8c978d17 | ||

|

|

d55994b66a | ||

|

|

ff4f2ae0b6 | ||

|

|

0b00f15811 | ||

|

|

bed5218550 | ||

|

|

86b67a9a7b | ||

|

|

73370de1f9 | ||

|

|

524aba0964 | ||

|

|

64528d8e0e | ||

|

|

31b5faa729 | ||

|

|

17977a2fff | ||

|

|

3e007eeaae | ||

|

|

a96b209e1b | ||

|

|

05b897f43e | ||

|

|

738dcac60d | ||

|

|

b3bbeee5e2 | ||

|

|

782eae4d4c | ||

|

|

f2f5e212f5 | ||

|

|

ff7102468e | ||

|

|

118cb1017a | ||

|

|

360bb6f306 | ||

|

|

d8e314db1a | ||

|

|

fd14c51f85 | ||

|

|

57a5a9baeb | ||

|

|

65c74c75c7 | ||

|

|

e82f3b3975 | ||

|

|

d7323213b8 | ||

|

|

fbc33da734 | ||

|

|

210815d4cf | ||

|

|

1e672ae349 | ||

|

|

19fabd0e64 | ||

|

|

ef392ef6ba | ||

|

|

046e658984 | ||

|

|

a46db9e0df | ||

|

|

17f3cc3ad8 | ||

|

|

3236a10cf5 | ||

|

|

a4eb6d5f1b | ||

|

|

a09661fc83 | ||

|

|

f52ab69a5b | ||

|

|

9d1b620dcf | ||

|

|

3ebd801b3d | ||

|

|

05181f1888 | ||

|

|

6875baf64c | ||

|

|

0cdb1e638d | ||

|

|

da415e5c6b | ||

|

|

c46a1c1e2f | ||

|

|

b79a1530fb | ||

|

|

a6a7ab45f8 | ||

|

|

c8f69ffe77 | ||

|

|

79982e0e8d | ||

|

|

bc937ed2db | ||

|

|

8ca028eb2e | ||

|

|

df17e6b75e | ||

|

|

f880e1834d | ||

|

|

4dd1b97e38 | ||

|

|

074e3fcd6e | ||

|

|

aeb433cc39 | ||

|

|

eb9d360c0a | ||

|

|

abfad4e025 | ||

|

|

3f40fada1b | ||

|

|

a9871d05b2 | ||

|

|

39e46d2e0b | ||

|

|

e9091cbb8c | ||

|

|

cb340d78e1 | ||

|

|

996b2db514 | ||

|

|

548d7b9833 | ||

|

|

96dbbf4db6 | ||

|

|

4f14462af7 | ||

|

|

1e08b4ece6 | ||

|

|

177ebe26de | ||

|

|

6fd9efc30a | ||

|

|

da72184fda | ||

|

|

6f212a41d8 | ||

|

|

52314d1a35 | ||

|

|

3028a18a37 | ||

|

|

a2b31cb28d | ||

|

|

26a5fcf989 | ||

|

|

509086ef54 | ||

|

|

963510ed22 | ||

|

|

2c0f8cda50 | ||

|

|

50d2671d75 | ||

|

|

b73d879f3c | ||

|

|

725a5fe5b9 | ||

|

|

65ca42ca42 | ||

|

|

b22ff59f7b | ||

|

|

58527857d9 | ||

|

|

6306c4555c | ||

|

|

922603f906 | ||

|

|

f8d45de749 | ||

|

|

b6760e19b7 | ||

|

|

6ce25f38e1 | ||

|

|

5cb7f726bc | ||

|

|

a334f33b35 | ||

|

|

cb1602c2de | ||

|

|

b503271aba | ||

|

|

008e5651f8 | ||

|

|

5e3aab12a7 | ||

|

|

8026b6c874 | ||

|

|

51b80f6fa1 | ||

|

|

75fdeb2843 | ||

|

|

2b1d927de4 | ||

|

|

e5d788497a | ||

|

|

b173e2ef86 | ||

|

|

042676fff7 | ||

|

|

44d53146af | ||

|

|

3d48c2427a | ||

|

|

a9046d8f35 | ||

|

|

b4a1b81aec | ||

|

|

90eb0ea27a | ||

|

|

4f01b9fd25 | ||

|

|

174b5c8f7f | ||

|

|

3912fcb238 | ||

|

|

ef70457a48 | ||

|

|

8c4dbaec4f | ||

|

|

645e8f426c | ||

|

|

bae1d1c047 | ||

|

|

ce4fb069d5 | ||

|

|

9444000d46 | ||

|

|

eacd9ac240 | ||

|

|

cf38d6ca69 | ||

|

|

905993d66e | ||

|

|

b8656763ec | ||

|

|

0d7fe2e347 | ||

|

|

33bd871a63 | ||

|

|

772122b255 | ||

|

|

9fb346751c | ||

|

|

10e560c5b2 | ||

|

|

cb058e91a3 | ||

|

|

ba9f2bc376 | ||

|

|

fb7e234120 | ||

|

|

27e7407407 | ||

|

|

623397d20a | ||

|

|

7d46de33d8 | ||

|

|

8c80cecdfb | ||

|

|

5470b51cc7 | ||

|

|

8e0b1d8aee | ||

|

|

e53f431273 | ||

|

|

8e0ee67108 | ||

|

|

3d82d9af1b | ||

|

|

8a0bd23985 | ||

|

|

2834459b22 | ||

|

|

000894dabd | ||

|

|

494620cdea | ||

|

|

a502eb239d | ||

|

|

caf775093e | ||

|

|

4387e4764f | ||

|

|

3b8e17c21f | ||

|

|

f28e18e676 | ||

|

|

b4bab1d5b9 | ||

|

|

c4d5072e5c | ||

|

|

852bf750ca | ||

|

|

47359c4113 | ||

|

|

15f2c4c769 | ||

|

|

e74af0db89 | ||

|

|

a0174c61e8 | ||

|

|

5b30dce609 | ||

|

|

8f6099e3e4 | ||

|

|

7c44375223 | ||

|

|

72e204f8fd | ||

|

|

b5f5b53e37 | ||

|

|

1c15133a52 | ||

|

|

7c9c2c35f8 | ||

|

|

5ff62d8f22 | ||

|

|

9806e568c0 | ||

|

|

b4bb4e2938 | ||

|

|

4427173a6c | ||

|

|

db66fe33fa | ||

|

|

de7b809229 | ||

|

|

cf5fa96a93 | ||

|

|

a40df1ff87 | ||

|

|

a161aa2c92 | ||

|

|

cad0f25345 | ||

|

|

2ed453a400 | ||

|

|

452d8a686f | ||

|

|

9e76b6ee70 | ||

|

|

45e97b3753 | ||

|

|

ecc16c69e6 | ||

|

|

90f77f6d5c | ||

|

|

0c11cf747a | ||

|

|

6d36475ed3 | ||

|

|

fee6ff43bf | ||

|

|

57cd5ec818 | ||

|

|

02512e0f4f | ||

|

|

555f4a8a6d | ||

|

|

3633766544 | ||

|

|

e98a984417 | ||

|

|

bc9141753f | ||

|

|

1f9f4157a6 | ||

|

|

778a3ed551 | ||

|

|

5ea4305185 | ||

|

|

ef311f22bf | ||

|

|

e202530afb | ||

|

|

85deeaf806 | ||

|

|

825c8a6abe | ||

|

|

cdc8f63b4b | ||

|

|

9db9818ede | ||

|

|

4f7ee669d3 | ||

|

|

77f9947613 | ||

|

|

a8eb3b6ac5 | ||

|

|

575eab1cf0 | ||

|

|

7a23e4fd4e | ||

|

|

77e6124b00 | ||

|

|

b16b276f36 | ||

|

|

a3ddb58566 | ||

|

|

be7252f620 | ||

|

|

4f380debb5 | ||

|

|

f34d3620b1 | ||

|

|

70e99447f9 | ||

|

|

db8af3d1e0 | ||

|

|

7f70b0f703 | ||

|

|

2e10cc8e79 | ||

|

|

047d9143c0 | ||

|

|

047c4aa3a0 | ||

|

|

925b220905 | ||

|

|

6708059227 | ||

|

|

1f3d9d4e1c | ||

|

|

0dcfac8f15 | ||

|

|

ad8b7f0894 | ||

|

|

55f810b23f | ||

|

|

65eddee63e | ||

|

|

a7a0eef125 | ||

|

|

5d35af9d69 | ||

|

|

ea21bca7df | ||

|

|

a3c0737ba8 | ||

|

|

7b57b3392c | ||

|

|

492451bfee | ||

|

|

9747995510 | ||

|

|

ad9112010f | ||

|

|

a4ec2d1d86 | ||

|

|

b7f07951f6 | ||

|

|

0e3363e61c | ||

|

|

4322c98f73 | ||

|

|

edcf789126 | ||

|

|

b985ba4f0e | ||

|

|

67c0405274 | ||

|

|

9b32151ab5 | ||

|

|

a1e8077f45 | ||

|

|

956e4e2aa7 | ||

|

|

b51a659515 | ||

|

|

44a6f09a09 | ||

|

|

4c10525078 | ||

|

|

c9ab8b2eff | ||

|

|

4bf38bf00f | ||

|

|

7c7c67948e | ||

|

|

263cb96786 | ||

|

|

b6e3e7a658 | ||

|

|

ceaf1423f4 | ||

|

|

c2e0a275e1 | ||

|

|

c8620a066d | ||

|

|

9598b503ec | ||

|

|

1ca566f670 | ||

|

|

94f4ec8b96 | ||

|

|

7aab2c55ff | ||

|

|

6abb4d34c1 | ||

|

|

c8ccf080f3 | ||

|

|

0342ae926c | ||

|

|

be08742653 | ||

|

|

528f7da5ef | ||

|

|

7d72ae3449 | ||

|

|

753cde0b85 | ||

|

|

223ba44b61 | ||

|

|

cd02483b19 | ||

|

|

f724662874 | ||

|

|

bee762737e | ||

|

|

83efd3e506 | ||

|

|

2278a6cc73 | ||

|

|

586b60b276 | ||

|

|

f07b9ea304 | ||

|

|

09dca5d76c | ||

|

|

65bb808441 | ||

|

|

83b79edb42 | ||

|

|

b8ec244d92 | ||

|

|

5b924614aa | ||

|

|

43103add47 | ||

|

|

124d5d6bb2 | ||

|

|

58fde558f7 | ||

|

|

8b314acfcf | ||

|

|

1c0eab9893 | ||

|

|

514079fe96 | ||

|

|

c62daa0c59 | ||

|

|

1a05101f50 | ||

|

|

47fb46c837 | ||

|

|

d29580aa02 | ||

|

|

d0fc62ef13 | ||

|

|

b14c0e4c11 | ||

|

|

e26d5b8ba5 | ||

|

|

8987ebca36 | ||

|

|

d3cd21956a | ||

|

|

43ec12f4f0 | ||

|

|

40cf2c85e6 | ||

|

|

6195b7c334 | ||

|

|

b149da28c8 | ||

|

|

e5cb2dd00e | ||

|

|

c9b883dff5 | ||

|

|

ad43253a90 | ||

|

|

979de67c2b | ||

|

|

8416caf798 | ||

|

|

80d9dfe420 | ||

|

|

385570c1e8 | ||

|

|

d82cfc6c62 | ||

|

|

27e9210d52 | ||

|

|

fdf52dcb17 | ||

|

|

1ff220ccf8 | ||

|

|

8a49b50f33 | ||

|

|

1b6e5b7116 | ||

|

|

536ab34955 | ||

|

|

f7369f0611 | ||

|

|

14bc105d43 | ||

|

|

2efb4365bf | ||

|

|

c1b86fc782 | ||

|

|

52e92cc0db | ||

|

|

3af2f636a5 | ||

|

|

6fb967cf79 | ||

|

|

0dab215e27 | ||

|

|

03c49ea1f8 | ||

|

|

6ec136e63f | ||

|

|

bba5671eaf | ||

|

|

88d7593d89 | ||

|

|

bd23b80d45 | ||

|

|

f96e0c4071 | ||

|

|

cbd8e40f14 | ||

|

|

2d2c033ba7 | ||

|

|

496c68d2af | ||

|

|

c505943e8b | ||

|

|

2841c09c1f | ||

|

|

11700d7ecb | ||

|

|

18444bd284 | ||

|

|

9d3a89d362 | ||

|

|

33eb2c8801 | ||

|

|

2d0ab4a1b8 | ||

|

|

2fec7ccd58 | ||

|

|

a835419168 | ||

|

|

052959f435 | ||

|

|

4ce16d1ea4 | ||

|

|

c1c7167ace | ||

|

|

3d538d4f14 | ||

|

|

7969e7116d | ||

|

|

4f58f2caee | ||

|

|

263baa81c0 | ||

|

|

092890b6ab | ||

|

|

db7d7ea288 | ||

|

|

452daf5d5e | ||

|

|

d373164e13 | ||

|

|

cd7715fa0e | ||

|

|

af9c3a8565 | ||

|

|

dd6b8c44a4 | ||

|

|

499273dbb7 | ||

|

|

6612b892b7 | ||

|

|

89cea31475 | ||

|

|

872fa07213 | ||

|

|

36e4ee7738 | ||

|

|

a139eb9bce | ||

|

|

7166696aa2 | ||

|

|

537a7908f1 | ||

|

|

560df58bb4 | ||

|

|

5629d47cb6 | ||

|

|

3fe776ee69 | ||

|

|

37b4ff811d | ||

|

|

7384aab2f4 | ||

|

|

581be02e53 | ||

|

|

71db83efce | ||

|

|

7ae7f25580 | ||

|

|

4ce05d4d4d | ||

|

|

fdb56de0a8 | ||

|

|

df56d73cec | ||

|

|

4ba0e155f3 | ||

|

|

304655f7ff | ||

|

|

6210f06bc0 | ||

|

|

09ae37410e | ||

|

|

351c803623 | ||

|

|

6a027b70e7 | ||

|

|

5d14baa43a | ||

|

|

141b397c82 | ||

|

|

fd853cfc6f | ||

|

|

63f718178e | ||

|

|

cdd2adbc73 | ||

|

|

74baf20feb | ||

|

|

958112af6b | ||

|

|

08d0f9448e | ||

|

|

7bcc8bd3a2 | ||

|

|

0eb2545773 | ||

|

|

714511b0a8 | ||

|

|

cb6a5d4069 | ||

|

|

c9700773f4 | ||

|

|

2229f87d9b | ||

|

|

d360503443 | ||

|

|

838182a8b4 | ||

|

|

967cfedbb3 | ||

|

|

a36645a282 | ||

|

|

3368a70f88 | ||

|

|

cd1715ba52 | ||

|

|

0bc2a16093 | ||

|

|

a21b3cd606 | ||

|

|

1c479684fc | ||

|

|

c9dbc7c7b7 | ||

|

|

c41dc9d8c0 | ||

|

|

1db5841424 | ||

|

|

e53b068902 | ||

|

|

2bd436dfd8 | ||

|

|

d13be25f45 | ||

|

|

6efd9dc5f9 | ||

|

|

f13530d8a1 | ||

|

|

1edd4012e4 | ||

|

|

4390c9855a | ||

|

|

4d53216c05 | ||

|

|

8a86fa491e | ||

|

|

040206859f | ||

|

|

d06119a21d | ||

|

|

c27ad97287 | ||

|

|

b1658c0f83 | ||

|

|

3e6a241c69 | ||

|

|

05b8609073 | ||

|

|

552f09f48a | ||

|

|

97df5c3b9c | ||

|

|

8d9102aa08 | ||

|

|

160dceff3e | ||

|

|

33e5ad2b5c | ||

|

|

998cb642a9 | ||

|

|

07ac195fea | ||

|

|

7d5990bf0f | ||

|

|

4ec982163e | ||

|

|

3c9502f241 | ||

|

|

63cecb2fd8 | ||

|

|

3029a2d33d | ||

|

|

fa0d2a959d | ||

|

|

0ece065cb0 | ||

|

|

f79cac3292 | ||

|

|

7a20a9941e | ||

|

|

24cc960379 | ||

|

|

353df6413f | ||

|

|

fb7e00c158 | ||

|

|

a0567beee5 | ||

|

|

b68eae16e5 | ||

|

|

9a812edee4 | ||

|

|

43d2a6e135 | ||

|

|

5839e22796 | ||

|

|

ee844c81d2 | ||

|

|

b6cb3b026c | ||

|

|

df33ebb2a0 | ||

|

|

d2a6838958 | ||

|

|

96b8054e6b | ||

|

|

dfdd2dadb4 | ||

|

|

d0528b7883 | ||

|

|

f40e682800 | ||

|

|

f4dc01d1ec | ||

|

|

187ddedf96 | ||

|

|

5613134fed | ||

|

|

e454ed4e39 | ||

|

|

1e2125653e | ||

|

|

835a726d2a | ||

|

|

0539cc6d8c | ||

|

|

549ff7d100 | ||

|

|

456b528785 | ||

|

|

003a6342a5 | ||

|

|

fb10764167 | ||

|

|

9e1554f5c7 | ||

|

|

42c82be8f5 | ||

|

|

76ec0e888b | ||

|

|

892c99fa23 | ||

|

|

28da482ef2 | ||

|

|

936f07336c | ||

|

|

224a59ab4b | ||

|

|

96c8e01a3b | ||

|

|

88bd7bff1e | ||

|

|

7075b9f0c0 | ||

|

|

b19666f7e0 | ||

|

|

e663f3db72 | ||

|

|

fd1ffdba80 | ||

|

|

a12538b3a8 | ||

|

|

cdff1ba37b | ||

|

|

f6a51f6b6f | ||

|

|

6c5ab7800e | ||

|

|

7e26a2ab98 | ||

|

|

4e6c398c8c | ||

|

|

d4e829465b | ||

|

|

1ade37312e | ||

|

|

372e381a85 | ||

|

|

374cc64601 | ||

|

|

1cf25572a3 | ||

|

|

ba45f70a30 | ||

|

|

5e56566de6 | ||

|

|

a2ccf7ef03 | ||

|

|

654dbf8198 | ||

|

|

53a5254897 | ||

|

|

45dd0611d9 | ||

|

|

e62069d3db | ||

|

|

5088636d5f | ||

|

|

003d70990e | ||

|

|

c433daf024 | ||

|

|

051d08b499 | ||

|

|

b980e7af29 | ||

|

|

4d0799dead | ||

|

|

d3dca1ddc2 | ||

|

|

9b8039440c | ||

|

|

eea5c9df2f | ||

|

|

aa9aff800c | ||

|

|

0b3b5e230b | ||

|

|

db0d91beb1 | ||

|

|

4758033445 | ||

|

|

1e48fb8cda | ||

|

|

1d8da117d6 | ||

|

|

635fa795d2 | ||

|

|

c1792df819 | ||

|

|

36944f8073 | ||

|

|

4d59cb0351 | ||

|

|

fd7269d455 | ||

|

|

b375e6a250 | ||

|

|

48589d20e2 | ||

|

|

be9cbcf5ac | ||

|

|

b04faddac4 | ||

|

|

e925187dda | ||

|

|

06f380a17a | ||

|

|

67882414e1 | ||

|

|

2b149fb8ea | ||

|

|

3166bd5df5 | ||

|

|

e911452d0c | ||

|

|

deac5ad2fe | ||

|

|

f097267bcd | ||

|

|

161130c116 | ||

|

|

a03b8f28ae | ||

|

|

6d3798ad08 | ||

|

|

70921b8d15 | ||

|

|

b185f83fc3 | ||

|

|

bb9ae02ccc | ||

|

|

60af295c0a | ||

|

|

e7fe52a625 | ||

|

|

49c506eed9 | ||

|

|

21fadf6df2 | ||

|

|

5fcccbc97d | ||

|

|

3ef2b6cfa2 | ||

|

|

84b4269c75 | ||

|

|

a0c09af67e | ||

|

|

a2d57d43d1 | ||

|

|

df33f1a130 | ||

|

|

4c6a2055c2 | ||

|

|

f09a3df870 | ||

|

|

ea1a412749 | ||

|

|

db82327d9a | ||

|

|

ea1a02bd7d | ||

|

|

1606658cb1 | ||

|

|

54ba66733e | ||

|

|

f6847e6f8c |

120

.drone.yml

@@ -1,120 +0,0 @@
----
-kind: pipeline
-name: integration-testing
-
-platform:
-  os: linux
-  arch: amd64
-
-clone:
-  disable: true
-
-steps:
-- name: prepare-tests
-  pull: default
-  image: timovibritannia/ansible
-  commands:
-  - git clone https://github.com/mailcow/mailcow-integration-tests.git --branch $(curl -sL https://api.github.com/repos/mailcow/mailcow-integration-tests/releases/latest | jq -r '.tag_name') --single-branch .
-  - chmod +x ci.sh
-  - chmod +x ci-ssh.sh
-  - chmod +x ci-piprequierments.sh
-  - ./ci.sh
-  - wget -O group_vars/all/secrets.yml $SECRETS_DOWNLOAD_URL --quiet
-  environment:
-    SECRETS_DOWNLOAD_URL:
-      from_secret: SECRETS_DOWNLOAD_URL
-    VAULT_PW:
-      from_secret: VAULT_PW
-  when:
-    branch:
-    - master
-    - staging
-    event:
-    - push
-
-- name: lint
-  pull: default
-  image: timovibritannia/ansible
-  commands:
-  - ansible-lint ./
-  when:
-    branch:
-    - master
-    - staging
-    event:
-    - push
-
-- name: create-server
-  pull: default
-  image: timovibritannia/ansible
-  commands:
-  - ./ci-piprequierments.sh
-  - ansible-playbook mailcow-start-server.yml --diff
-  - ./ci-ssh.sh
-  environment:
-    ANSIBLE_HOST_KEY_CHECKING: false
-    ANSIBLE_FORCE_COLOR: true
-  when:
-    branch:
-    - master
-    - staging
-    event:
-    - push
-
-- name: setup-server
-  pull: default
-  image: timovibritannia/ansible
-  commands:
-  - sleep 120
-  - ./ci-piprequierments.sh
-  - ansible-playbook mailcow-setup-server.yml --private-key /drone/src/id_ssh_rsa --diff
-  environment:
-    ANSIBLE_HOST_KEY_CHECKING: false
-    ANSIBLE_FORCE_COLOR: true
-  when:
-    branch:
-    - master
-    - staging
-    event:
-    - push
-
-- name: run-tests
-  pull: default
-  image: timovibritannia/ansible
-  commands:
-  - ./ci-piprequierments.sh
-  - ansible-playbook mailcow-integration-tests.yml --private-key /drone/src/id_ssh_rsa --diff
-  environment:
-    ANSIBLE_HOST_KEY_CHECKING: false
-    ANSIBLE_FORCE_COLOR: true
-  when:
-    branch:
-    - master
-    - staging
-    event:
-    - push
-
-- name: delete-server
-  pull: default
-  image: timovibritannia/ansible
-  commands:
-  - ./ci-piprequierments.sh
-  - ansible-playbook mailcow-delete-server.yml --diff
-  environment:
-    ANSIBLE_HOST_KEY_CHECKING: false
-    ANSIBLE_FORCE_COLOR: true
-  when:
-    branch:
-    - master
-    - staging
-    event:
-    - push
-    status:
-    - failure
-    - success
-
----
-kind: signature
-hmac: f6619243fe2a27563291c9f2a46d93ffbc3b6dced9a05f23e64b555ce03a31e5
-
-...

2

.github/FUNDING.yml

vendored

@@ -1 +1 @@
-custom: https://mailcow.github.io/mailcow-dockerized-docs/#help-mailcow
+custom: ["https://www.servercow.de/mailcow?lang=en#sal"]

175

.github/ISSUE_TEMPLATE/Bug_report.yml

vendored

@@ -7,8 +7,8 @@ body:
       label: Contribution guidelines
       description: Please read the contribution guidelines before proceeding.
       options:
-        - label: I've read the [contribution guidelines](https://github.com/mailcow/mailcow-dockerized/blob/master/CONTRIBUTING.md) and wholeheartedly agree
-          required: true
+        - label: I've read the [contribution guidelines](https://github.com/mailcow/mailcow-dockerized/blob/master/CONTRIBUTING.md) and wholeheartedly agree
+          required: true
   - type: checkboxes
     attributes:
       label: I've found a bug and checked that ...
@@ -26,69 +26,142 @@ body:
     attributes:
       label: Description
       description: Please provide a brief description of the bug in 1-2 sentences. If applicable, add screenshots to help explain your problem. Very useful for bugs in mailcow UI.
+      render: plain text
     validations:
       required: true
   - type: textarea
     attributes:
-      label: Logs
-      description: Please take a look at the [official documentation](https://mailcow.github.io/mailcow-dockerized-docs/debug-logs/) and post the last few lines of logs, when the error occurs. For example, docker container logs of affected containers. This will be automatically formatted into code, so no need for backticks.
-      render: bash
+      label: "Logs:"
+      description: "Please take a look at the [official documentation](https://docs.mailcow.email/troubleshooting/debug-logs/) and post the last few lines of logs, when the error occurs. For example, docker container logs of affected containers. This will be automatically formatted into code, so no need for backticks."
+      render: plain text
     validations:
       required: true
   - type: textarea
     attributes:
-      label: Steps to reproduce
-      description: Please describe the steps to reproduce the bug. Screenshots can be added, if helpful.
+      label: "Steps to reproduce:"
+      description: "Please describe the steps to reproduce the bug. Screenshots can be added, if helpful."
+      render: plain text
       placeholder: |-
         1. ...
         2. ...
         3. ...
     validations:
       required: true
-  - type: textarea
+  - type: markdown
     attributes:
-      label: System information
-      description: In this stage we would kindly ask you to attach general system information about your setup.
-      value: |-
-        | Question | Answer |
-        | --- | --- |
-        | My operating system | I_DO_REPLY_HERE |
-        | Is Apparmor, SELinux or similar active? | I_DO_REPLY_HERE |
-        | Virtualization technlogy (KVM, VMware, Xen, etc - **LXC and OpenVZ are not supported** | I_DO_REPLY_HERE |
-        | Server/VM specifications (Memory, CPU Cores) | I_DO_REPLY_HERE |
-        | Docker Version (`docker version`) | I_DO_REPLY_HERE |
-        | Docker-Compose Version (`docker-compose version`) | I_DO_REPLY_HERE |
-        | Reverse proxy (custom solution) | I_DO_REPLY_HERE |
-
-        Output of `git diff origin/master`, any other changes to the code? If so, **please post them**:
-        ```
-        YOUR OUTPUT GOES HERE
-        ```
-
-        All third-party firewalls and custom iptables rules are unsupported. **Please check the Docker docs about how to use Docker with your own ruleset**. Nevertheless, iptabels output can help us to help you:
-        iptables -L -vn:
-        ```
-        YOUR OUTPUT GOES HERE
-        ```
-
-        ip6tables -L -vn:
-        ```
-        YOUR OUTPUT GOES HERE
-        ```
-
-        iptables -L -vn -t nat:
-        ```
-        YOUR OUTPUT GOES HERE
-        ```
-
-        ip6tables -L -vn -t nat:
-        ```
-        YOUR OUTPUT GOES HERE
-        ```
-
-        DNS problems? Please run `docker exec -it $(docker ps -qf name=acme-mailcow) dig +short stackoverflow.com @172.22.1.254` (set the IP accordingly, if you changed the internal mailcow network) and post the output:
-        ```
-        YOUR OUTPUT GOES HERE
-        ```
+      value: |
+        ## System information
+        ### In this stage we would kindly ask you to attach general system information about your setup.
+  - type: dropdown
+    attributes:
+      label: "Which branch are you using?"
+      description: "#### `git rev-parse --abbrev-ref HEAD`"
+      multiple: false
+      options:
+        - master
+        - nightly
+    validations:
+      required: true
+  - type: dropdown
+    attributes:
+      label: "Which architecture are you using?"
+      description: "#### `uname -m`"
+      multiple: false
+      options:
+        - x86
+        - ARM64 (aarch64)
+    validations:
+      required: true
+  - type: input
+    attributes:
+      label: "Operating System:"
+      placeholder: "e.g. Ubuntu 22.04 LTS"
+    validations:
+      required: true
+  - type: input
+    attributes:
+      label: "Server/VM specifications:"
+      placeholder: "Memory, CPU Cores"
+    validations:
+      required: true
+  - type: input
+    attributes:
+      label: "Is Apparmor, SELinux or similar active?"
+      placeholder: "yes/no"
+    validations:
+      required: true
+  - type: input
+    attributes:
+      label: "Virtualization technology:"
+      placeholder: "KVM, VMware, Xen, etc - **LXC and OpenVZ are not supported**"
+    validations:
+      required: true
+  - type: input
+    attributes:
+      label: "Docker version:"
+      description: "#### `docker version`"
+      placeholder: "20.10.21"
+    validations:
+      required: true
+  - type: input
+    attributes:
+      label: "docker-compose version or docker compose version:"
+      description: "#### `docker-compose version` or `docker compose version`"
+      placeholder: "v2.12.2"
+    validations:
+      required: true
+  - type: input
+    attributes:
+      label: "mailcow version:"
+      description: "#### ```git describe --tags `git rev-list --tags --max-count=1` ```"
+      placeholder: "2022-08"
+    validations:
+      required: true
+  - type: input
+    attributes:
+      label: "Reverse proxy:"
+      placeholder: "e.g. Nginx/Traefik"
+    validations:
+      required: true
+  - type: textarea
+    attributes:
+      label: "Logs of git diff:"
+      description: "#### Output of `git diff origin/master`, any other changes to the code? If so, **please post them**:"
+      render: plain text
+    validations:
+      required: true
+  - type: textarea
+    attributes:
+      label: "Logs of iptables -L -vn:"
+      description: "#### Output of `iptables -L -vn`"
+      render: plain text
+    validations:
+      required: true
+  - type: textarea
+    attributes:
+      label: "Logs of ip6tables -L -vn:"
+      description: "#### Output of `ip6tables -L -vn`"
+      render: plain text
+    validations:
+      required: true
+  - type: textarea
+    attributes:
+      label: "Logs of iptables -L -vn -t nat:"
+      description: "#### Output of `iptables -L -vn -t nat`"
+      render: plain text
+    validations:
+      required: true
+  - type: textarea
+    attributes:
+      label: "Logs of ip6tables -L -vn -t nat:"
+      description: "#### Output of `ip6tables -L -vn -t nat`"
+      render: plain text
+    validations:
+      required: true
+  - type: textarea
+    attributes:
+      label: "DNS check:"
+      description: "#### Output of `docker exec -it $(docker ps -qf name=acme-mailcow) dig +short stackoverflow.com @172.22.1.254` (set the IP accordingly, if you changed the internal mailcow network)"
+      render: plain text
     validations:
       required: true

9

.github/ISSUE_TEMPLATE/config.yml

vendored

@@ -1,8 +1,11 @@
 blank_issues_enabled: false
 contact_links:
-  - name: ❓ Community-driven support
-    url: https://mailcow.github.io/mailcow-dockerized-docs/#get-support
+  - name: ❓ Community-driven support (Free)
+    url: https://docs.mailcow.email/#get-support
     about: Please use the community forum for questions or assistance
+  - name: 🔥 Premium Support (Paid)
+    url: https://www.servercow.de/mailcow?lang=en#support
+    about: Buy a support subscription for any critical issues and get assisted by the mailcow Team. See conditions!
   - name: 🚨 Report a security vulnerability
-    url: https://www.servercow.de/anfrage?lang=en
+    url: "mailto:info@servercow.de?subject=mailcow: dockerized Security Vulnerability"
     about: Please give us appropriate time to verify, respond and fix before disclosure.

13

.github/ISSUE_TEMPLATE/pr_to_nighty_template.yml

vendored

Normal file

@@ -0,0 +1,13 @@
+## :memo: Brief description
+<!-- Diff summary - START -->
+<!-- Diff summary - END -->
+
+
+## :computer: Commits
+<!-- Diff commits - START -->
+<!-- Diff commits - END -->
+
+
+## :file_folder: Modified files
+<!-- Diff files - START -->
+<!-- Diff files - END -->

31

.github/renovate.json

vendored

Normal file

@@ -0,0 +1,31 @@
+{
+  "enabled": true,
+  "timezone": "Europe/Berlin",
+  "dependencyDashboard": true,
+  "dependencyDashboardTitle": "Renovate Dashboard",
+  "commitBody": "Signed-off-by: milkmaker <milkmaker@mailcow.de>",
+  "rebaseWhen": "auto",
+  "labels": ["renovate"],
+  "assignees": [
+    "@magiccc"
+  ],
+  "baseBranches": ["staging"],
+  "enabledManagers": ["github-actions", "regex", "docker-compose"],
+  "ignorePaths": [
+    "data\/web\/inc\/lib\/vendor\/**"
+  ],
+  "regexManagers": [
+    {
+      "fileMatch": ["^helper-scripts\/nextcloud.sh$"],
+      "matchStrings": [
+        "#\\srenovate:\\sdatasource=(?<datasource>.*?) depName=(?<depName>.*?)( versioning=(?<versioning>.*?))?( extractVersion=(?<extractVersion>.*?))?\\s.*?_VERSION=(?<currentValue>.*)"
+      ]
+    },
+    {
+      "fileMatch": ["(^|/)Dockerfile[^/]*$"],
+      "matchStrings": [
+        "#\\srenovate:\\sdatasource=(?<datasource>.*?) depName=(?<depName>.*?)( versioning=(?<versioning>.*?))?( extractVersion=(?<extractVersion>.*?))?\\s(ENV|ARG) .*?_VERSION=(?<currentValue>.*)\\s"
+      ]
+    }
+  ]
+}
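The first `matchStrings` pattern in the `renovate.json` diff above can be tried out in isolation. The following is a minimal sketch, not Renovate's own implementation: it assumes the pattern is applied to a comment line followed by a `*_VERSION=` assignment, it rewrites the JavaScript-style named groups `(?<name>...)` as Python's `(?P<name>...)`, and the sample snippet (including the `nextcloud/server` dependency name and the version number) is hypothetical, since the contents of `helper-scripts/nextcloud.sh` are not shown in this diff.

```python
import re

# The matchStrings pattern for helper-scripts/nextcloud.sh, JSON-unescaped,
# with (?<name>...) rewritten as Python's (?P<name>...).
PATTERN = re.compile(
    r"#\srenovate:\sdatasource=(?P<datasource>.*?) depName=(?P<depName>.*?)"
    r"( versioning=(?P<versioning>.*?))?"
    r"( extractVersion=(?P<extractVersion>.*?))?"
    r"\s.*?_VERSION=(?P<currentValue>.*)"
)

# Hypothetical annotated snippet (an assumption for illustration only).
SAMPLE = (
    "# renovate: datasource=github-releases depName=nextcloud/server versioning=semver\n"
    "NEXTCLOUD_VERSION=27.1.4\n"
)


def extract_dependency(text):
    """Return the named capture groups of the first annotated version line, or None."""
    match = PATTERN.search(text)
    return match.groupdict() if match else None


if __name__ == "__main__":
    print(extract_dependency(SAMPLE))
```

Optional fields that are missing from the annotation (here `extractVersion`) come back as `None`, and a `*_VERSION=` line without the preceding `# renovate:` comment is not matched at all.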

BIN

.github/workflows/assets/check_prs_if_on_staging.png

vendored

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 71 KiB |

33

.github/workflows/check_prs_if_on_staging.yml

vendored

Normal file

@@ -0,0 +1,33 @@

|

||||

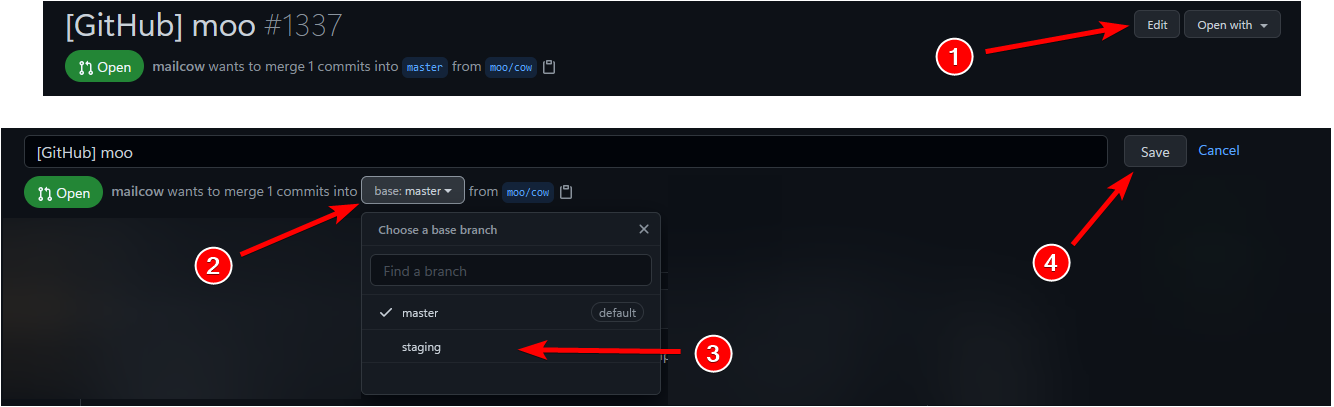

name: Check PRs if on staging

|

||||

on:

|

||||

pull_request_target:

|

||||

types: [opened, edited]

|

||||

permissions: {}

|

||||

|

||||

jobs:

|

||||

is_not_staging:

|

||||

runs-on: ubuntu-latest

|

||||

if: github.event.pull_request.base.ref != 'staging' #check if the target branch is not staging

|

||||

steps:

|

||||

- name: Send message

|

||||

uses: thollander/actions-comment-pull-request@v2.4.3

|

||||

with:

|

||||

GITHUB_TOKEN: ${{ secrets.CHECKIFPRISSTAGING_ACTION_PAT }}

|

||||

message: |

|

||||

Thanks for contributing!

|

||||

|

||||

I noticed that you didn't select `staging` as your base branch. Please change the base branch to `staging`.

|

||||

See the attached picture on how to change the base branch to `staging`:

|

||||

|

||||

|

||||

|

||||

- name: Fail #we want to see failed checks in the PR

|

||||

if: ${{ success() }} #set exit code to 1 even if commenting somehow failed

|

||||

run: exit 1

|

||||

|

||||

is_staging:

|

||||

runs-on: ubuntu-latest

|

||||

if: github.event.pull_request.base.ref == 'staging' #check if the target branch is staging

|

||||

steps:

|